5 Safety critical programming

5.1 Safety critical development

The previous chapter examined the working process of a small computer game company. Using concepts from hermeneutical theory, we found that the programming work in this case was easily understood as a process of understanding. By contrast, the mainstream theories – software engineering, Agile development, and computer science – proved to be less useful. The conclusion drawn by the analysis was that the mainstream theories are not well suited as general theories of programming.

In this chapter we will take a look at some programming processes where software engineering theory is prominent and used in practice: namely, safety critical programming. We will do this for two reasons. First, investigating programming practices that take place in very different circumstances to a small game company will deepen our understanding of how programming works. Second, from a cultural perspective, it is not enough to criticize the mainstream theories on the basis of one example. We must also be able to explain what the theories are actually useful for and why they look the way they do. In this respect, the software engineering theories are the most interesting to examine. As explained in Chapters 3 (Programming theories) and 4 (Game programming), Agile development is very close to ordinary programming practice, and computer science theory is not primarily concerned with work practices. Software engineering, on the other hand, is oriented toward work processes and, at the same time, quite different from the way programmers organize themselves if left to their own devices.

The term “safety critical” applies to products and areas in which malfunctions can result in people being injured or killed, or in which errors are extremely expensive.1 Safety critical products are products such as cars, aeroplanes, and hospital equipment; and safety critical industries include nuclear power plants and chemical processing plants. The software involved in safety critical applications is often control software for machines or products, such as the software that controls the brakes in a modern car, or the software that controls the valves in an industrial plant.

The safety critical area is regulated by a large number of standards, depending on the particular industry or geographical area. The most important standard in Europe currently is the IEC 61508 “Functional safety of electrical/electronic/programmable electronic safety-related systems”.2 The IEC 61508 is a general standard, with a number of derived standards that apply to specific industries. The standards are often backed by legal requirements in national courts. On other occasions, the only requirements that apply are the ones that industry associations agree upon among themselves. In any case, compliance with the standards is most often verified by separate verification agencies, such as the German TÜV, or the German-American Exida, which perform the verifications for a fee for their industry customers.

1 These are also called “mission critical” areas.

5.2 A large avionics company

Before we discuss safety critical programming processes in general, we will take a look at a couple of specific examples of processes. First is the way work is done at a software department in the company Cassidian. The following description is based on an interview with two engineers who work there and thus represents the employees’ opinion of their work.1 The company makes components for military aircraft such as the Airbus A400M, shown in Figure 5.1. Cassidian is a part of the holding company EADS, which is an international European aerospace and defence company with around 110,000 employees – more than the population of a small city. Cassidian itself has around 31,000 employees. The best known EADS products are the Airbus aircraft.

A typical software department in Cassidian will be a sub-department of an equipment level department, a department that works on coordinating the efforts of creating some piece of equipment for the aircraft. The equipment level department might have, for example, around 30 system engineers and four sub-departments. Two of the sub-departments are hardware departments, with around 25 engineers each. The two others are software departments with around 20 software engineers each – one department for resident software (embedded software) and one for application software.

Besides the software departments that do the actual software work, and the equipment level department that gives the software department its requirements, other departments are involved in the work. The quality department contains some sub-departments that observe the software development process and modify it based on feedback from lower-level departments. The safety department is involved in making sure that the work fulfils the safety standards. Both the quality department and the safety department are independent departments within Cassidian. The quality department is independent because this is required by the safety standards; the safety department is not required to be independent, but it has been found very helpful to have it that way.

The general working process at Cassidian is an engineering process. The first phase is requirements engineering, in which it is decided what the piece of equipment is going to do. This is followed by conceptual design and detailed design. Then comes implementation – the actual making of the equipment – followed by the verification phase, which checks whether the equipment actually fulfils the requirements from the first phase.

The work process is standardized internally in Cassidian, but it is tailored to each project. Before a project is started, a team defines the tailoring of the process that will be used. This team consists of high-ranking managers who represent different interests: the project’s financial officer, the quality manager, the configuration manager, the person responsible for engineering on the project, and people representing logistics support, tests, and production. Sometimes even the head of Cassidian is involved in the project team’s decisions. The reason that so many important people are involved is that the projects are big and costly, with budgets of between 5 and 10 million euros, and therefore carry a lot of responsibility. In addition to determining the process tailoring of a project, the project team also carries out risk assessment, risk analysis, and feasibility studies; assesses financial feasibility; and predicts marketing consequences.

When the project has been approved by the project team, the engineering process can begin. The higher level system requirements are broken down to unit level requirements, and separated into basic hardware and software requirements. This makes up the equipment architecture. After the architecture has been defined, the unit activities start. Each unit prepares its own requirements paperwork and works out a unit architecture. After this the unit requirements are validated, if required. This is followed by the actual implementation of the unit, after which comes informal testing to weed out errors. Once the unit is ready, the various hardware and software units are integrated and formally tested. When all units have been integrated and tested, the final product is complete.

When, in the engineering process, the system level architecture has been defined, it has at the same time been decided which parts of the system are to be created in hardware and which in software. The software departments can then start their work, which follows the software development process. This process starts with writing a software requirements specification, which is reviewed by the system department, the hardware department, and members of the software department. The review includes validation of the software requirements to make sure that they are in accordance with the system requirements and hardware requirements. Sometimes, if the piece of equipment is meant to stand alone, the validation is omitted to save on the workload and cost, but for systems that go into aircraft the full program is always required.

After the software requirements specification has been written and reviewed, the conceptual design phase takes place. In this phase, the designers tend to arrive at a conceptual design they think will work, and try it out in some way. The feedback they get from this reveals problems in the conceptual design, which can then be corrected. The correction happens in the form of derived requirements, which the designers impose in addition to the system requirements from the requirements specification phase. The system department then verifies the derived requirements, because the derived requirements are not a part of the high-level system requirements that have been agreed upon.

After the conceptual design comes the detailed design phase, which consists mainly of generating the C code of the programs, compiling it to machine code, and finally implementation. But here, implementation does not mean coding, as is usual in programming. Rather, it means transferring the programs to run on a piece of hardware, which will often be some kind of test hardware, and then conducting some trials and tests on the newly-transferred software. After the detailed design phase comes integration of software and hardware, this time not with test hardware but with the hardware that has been developed by the hardware departments at the same time as the software departments wrote the programs. Finally, there is verification of the whole integrated system.

Because of the derived requirements, there is close cooperation throughout the development between the software, hardware, and systems departments. The derived requirements are always assessed by the independent safety department.

Equipment units can be qualified and certified according to standards. All units are qualified, but not all are certified. Each software or hardware unit does its own verification and qualification, meaning that it prepares its own qualification specification and qualification procedures. For a unit to be certified, it is necessary to convince a body of authority outside Cassidian that the unit and the development procedures meet the relevant standard. In order to do that, evidence of each step in the development process must be recorded so that it can be shown later that the step has been carried out correctly. This can, for example, be evidence connected with phase transition criteria, such as the outcome of a phase; it can also be evidence that certain rules have been followed, for example that certain forbidden structures are not found in the C code. The verification of requirements is a part of the evidence gathering process. There are three main ways of showing that requirements are covered: tests, analysis, and reviews. Tests provide the strongest evidence, but it can be difficult to cover a high enough percentage of the requirements with tests.

A number of software tools are used in Cassidian to help with the development process. IBM Rational DOORS is a requirements management program that is used to keep track of the large number of requirements in a project. The derived requirements of a subsystem need to be linked to the higher level system requirements. All the requirements also need to be linked to the tests that verify that they are satisfied, and all links need to be updated at all times. Aside from the requirements management software, a code checker is run regularly on the C code to check that it conforms to the style guides. This is required by the standards.
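The linking of requirements described here can be illustrated with a small sketch in C. It does not show how DOORS works internally: the record types `Requirement` and `TestLink`, the identifiers, and the function `count_untraced` are hypothetical names invented for this illustration. The sketch only counts requirements that either point to a higher level requirement that does not exist, or are not covered by any test.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical record types; a real requirements tool stores far more. */
typedef struct {
    const char *id;        /* identifier of this requirement */
    const char *parent_id; /* higher level requirement, NULL if top level */
} Requirement;

typedef struct {
    const char *test_id;   /* identifier of the test */
    const char *req_id;    /* requirement that the test verifies */
} TestLink;

/* Returns 1 if a requirement with the given id exists, 0 otherwise. */
static int requirement_exists(const Requirement *reqs, size_t n, const char *id)
{
    for (size_t i = 0; i < n; i++) {
        if (strcmp(reqs[i].id, id) == 0) {
            return 1;
        }
    }
    return 0;
}

/* Returns 1 if at least one test is linked to the requirement. */
static int has_test(const TestLink *links, size_t n, const char *req_id)
{
    for (size_t i = 0; i < n; i++) {
        if (strcmp(links[i].req_id, req_id) == 0) {
            return 1;
        }
    }
    return 0;
}

/* Counts requirements with a dangling parent link or without any test. */
size_t count_untraced(const Requirement *reqs, size_t n_reqs,
                      const TestLink *links, size_t n_links)
{
    size_t untraced = 0;

    assert(reqs != NULL && links != NULL);
    for (size_t i = 0; i < n_reqs; i++) {
        int parent_ok = (reqs[i].parent_id == NULL) ||
                        requirement_exists(reqs, n_reqs, reqs[i].parent_id);
        if (!parent_ok || !has_test(links, n_links, reqs[i].id)) {
            untraced++;
        }
    }
    return untraced;
}
```

A result of zero would mean that every requirement in the list is linked upwards and covered by a test. The point is only that the links form a structure that can be checked mechanically, which is what makes tool support practical when the number of requirements is large.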

At Cassidian, experienced colleagues act as coaches for new and less experienced colleagues. The coaches help their colleagues with using the software tools, and with following the work processes. In addition, there are internal training programs where necessary; for example, if an engineer is promoted to systems engineer he will participate in a training program explaining his new responsibilities. Regardless, the day-to-day work is the most important way of educating the engineers, as at least 80 per cent of the understanding needed to work with the avionics standards depends on everyday experience.3

The work processes at Cassidian are defined in the internal company standard, FlyXT. This standard is a synthesis of a number of other standards. On the system level, it originally builds on the V-model, a government model that was taken over from the military administration department Bundesamt für Wehrtechnik und Beschaffung. FlyXT completely covers two avionics standards: DO178B and DO254. DO178B applies only to the software parts of aircraft and DO254 applies only to the hardware. Together, they are the standards that are most commonly applied to aircraft production. The software development process at Cassidian follows the DO178B standard as it is expressed in the FlyXT standard. At the beginning of each project, the process steps are then copied to the project’s handbook or referenced from it, according to the wish of the project leader.

After each project is completed, an effort is made to record the lessons learned and incorporate them in the company standard. However, it is a big company, and whether this actually happens depends on the workload and on the people involved in the process. The top-level management wants to make the company as a whole comply with the process maturity model CMMI level 3. A process maturity model is a standard that is not particular to safety critical development: it sets requirements for a company’s paperwork processes, especially that they be well documented and consistent. CMMI compliance is an attempt by management to record systematically and standardize all processes in the company. The goal of this is to get rid of all tailoring of the process, so that the projects always follow the company standard without modifications. This goal is linked to a wish in Cassidian to make FlyXT the only standard in use by the company. Since Cassidian works as a subcontractor, the customers sometimes impose their own choice of standard on the work process. The ambition is to be able to say that FlyXT covers every avionics standard so that there is never any need to work with other standards. As part of this goal, the company strives to have reproducible process steps for all projects.

1 Interview 24. For a detailed discussion of my data gathering methods see Suenson 2013.

2 Licensed under the Creative Commons Attribution 4.0 International licence.

https://commons.wikimedia.org/wiki/File:Airbus_A400M_EC-404_ILA_2012_05.jpg

3 See the quote from the Cassidian engineer in Section 5.5 (Hermeneutical analysis).

5.3 A small farming systems company

The process at Cassidian is in many ways typical of the way in which software is developed in large companies in safety critical industries. However, not all safety critical software is developed by large companies or in typical settings. For a different example, we will take a look at how work is done in a small, Danish company named Skov. The description is based on an interview with the engineer who is in charge of software methods and tools.1

Skov makes automated equipment for animal production, primarily for swine and chicken. One area of business is ventilation and heating systems for animal stables. Another is production control systems, for controlling feeding, water dispensation, and lights. Minor areas of business include air purification systems and sensors for use in animal stables.

Skov has around 300 employees, of which 45 are employed in the development department. Of these, around 20 are software developers. Ten software developers work with ventilation systems, 10 with production control systems, and one with sensors. Most of the software made is control software for the systems that Skov sells, meaning that it is embedded software.

Skov considers its software development process to be nothing out of the ordinary compared to other small Danish companies. Most of the process is very informal, and it is not written down. The developers follow an Agile process where the work is planned in relatively short periods of a month or so, called Sprints.2 Much of the planning is also done from day to day. Every morning there is a short standing Scrum meeting where the day’s work is discussed, and before each project starts, developers, project managers and department managers have an informal discussion about the course of the project. The Agile process was adopted in the company three years ago after a trial Agile project.

The developers make an effort to include the customer throughout the development process. The software customers are typically internal Skov customers, though occasionally there is an external customer. The close collaboration with customers during software development is something the company has always practiced. The basis for the software development is a written requirement specification, and occasionally a design specification is written too, though neither document is at all formalized.

The software for Skov’s controllers is coded in UML, a graphical notation for software models. The models are then automatically translated to C code, which can run on the hardware. The company uses the commercial software tool Rhapsody for translating the UML models: this is used with almost no custom modification. The UML-based way of working is well suited to Skov’s products, which from a software perspective consist of a few main products that are sold in many slightly different variants under different trade names.

Because of the safety critical nature of Skov’s products, the testing process is very well developed. The tests are fully automated and take place in Skov’s test centre, where the software is tested against its specifications and in a variety of application situations. The testing procedures are thorough and formalized. In addition to ordinary tests, the company also performs so-called service tests, where customers test the company’s products; and there are stable tests, where the products are tested in real environments.

The safety critical aspects of Skov’s systems are mostly connected to ventilation. If the ventilation fails in a modern animal stable, the animals can suffocate in a few minutes. A stable filled with hundreds or thousands of dead animals would be catastrophic and reflect very poorly on the company’s reputation.

There are no formal requirements for certification of processes or products in the animal production industry. Likewise, there are no standards within the industry. Skov is very careful with its testing, not because of any external requirements, but in order to avoid bad publicity and a reputation for unsafe products. It strives to attain a high degree of safety while at the same time, its people “do not produce documentation for the sake of documentation”.3

Skov considers itself to be a developer of total systems, not of components, and that identity also influences the perception of software development in the company. From the company’s point of view the absence of formal industry standards is an advantage, because it is possible to come up with innovative solutions and products without risking conflict with existing standards. The only standards that exist are the traditions in the markets. Each market has its own distinct cultural tradition, and there is a big difference between what the United States markets expect of a product, and what the European markets expect.

Besides its own Agile software development process, Skov has an ambition to conform to the process maturity model CMMI level 2 (a lower stage of compliance than level 3). This ambition is held by Skov’s management and, as such, the introduction of CMMI has been a top-down process. By contrast, the Agile development method has been introduced as a bottom-up process, in that the software developers themselves desired and introduced the method with the consent of management. CMMI and the Agile method have never been in conflict in Skov: they appear to coexist peacefully within the company without much conscious effort.

The introduction of Agile methods did not in any way revolutionize the development process at Skov. When the company became curious with regard to Agile methods and decided to try them out, it discovered that it was already very agile in its approach, so the introduction did not add or change a great deal. In Skov’s opinion, constant testing is part of what makes a process Agile. This corresponds well with Skov’s process, which emphasizes the need for automatic, reproducible tests during the whole process.

Until a few years ago, Skov did not even use the phrase “safety critical” to describe itself. The company just said that it had to ensure that its products did not kill animals. However, in the past few years the company has become interested in finding out how much it has in common with the more formalized safety critical industries, as it has grown in size. This has been an organic growth without drastic changes in process, meaning that the company has always been able to retain the experience that has been accumulated among the employees.

The company’s processes in general are primarily based on the experience of the participants. This is also true of the critical test process, which is not based on formal certification but on a slow growth of experience and adjustments.

5.4 The safety standard

Having looked at the actual safety critical development processes of two different companies, we will now focus on one of the safety critical standards that govern most of safety critical development, in order to get an impression of what such standards are like and what is required to work with them. It is necessary to go into some detail regarding the standard, but the reader should not be discouraged if its technicalities seem overwhelming – the aim is solely to give a taste of what the standard is like. Bear in mind that even for experts in the field, it will take years to become thoroughly familiar with a standard such as IEC 61508.

As mentioned, the IEC 61508 is the most important safety critical standard, at least in Europe. It is a document numbering 597 pages, in seven parts, which governs all of the safety critical development process in general terms not specific to any particular industry.1 Part 3 – “Software requirements” – chiefly governs software development.

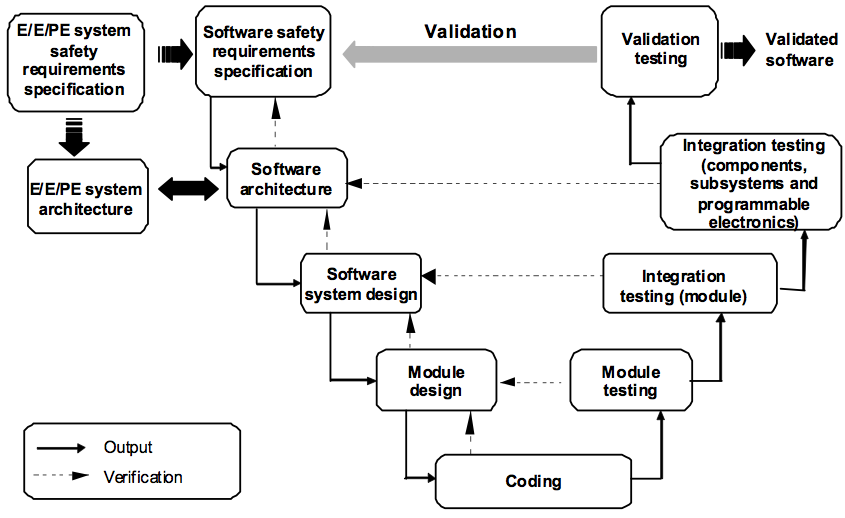

Figure 5.3 – “Software systematic capability and the development lifecycle (the V-model)”. IEC 61508-3. International Electrotechnical Commission 2009.

The standard specifies how software development should be done. Figure 5.3 shows the model for software development taken from IEC 61508-3 Figure 6, the V-model. This model was also mentioned in Section 3.1.3 (Process thinking); it takes its name from its shape.

In principle, the choice of development model is free, since the standard states:

“7.1.2.2 Any software lifecycle model may be used provided all the objectives and requirements of this clause are met.”

However, in practice, the choice of model is severely limited, since it essentially has to perform the same function as the V-model. The next paragraph of the standard specifies that the chosen model has to conform to the document-oriented software engineering models described in Section 3.1.3:

“7.1.2.3 Each phase of the software safety lifecycle shall be divided into elementary activities with the scope, inputs and outputs specified for each phase.”

Let us take a closer look at a typical paragraph of the standard:

“7.4.4.4 All off-line support tools in classes T2 and T3 shall have a specification or product manual which clearly defines the behaviour of the tool and any instructions or constraints on its use. See 7.1.2 for software development lifecycle requirements, and 3.2.11 of IEC 61508-4 for categories of software off-line support tool.

NOTE This ‘specification or product manual’ is not a compliant item safety manual (see Annex D of 61508-2 and also of this standard) for the tool itself. The concept of compliant item safety manual relates only to a pre-existing element that is incorporated into the executable safety related system. Where a pre-existing element has been generated by a T3 tool and then incorporated into the executable safety related system, then any relevant information from the tool’s ‘specification or product manual’ should be included in the compliant item safety manual that makes possible an assessment of the integrity of a specific safety function that depends wholly or partly on the incorporated element.”

Off-line support tools refers to the programs the developers use to make the safety critical software, such as editors, compilers, and analysis software. Categories T2 and T3 are those programs which can directly or indirectly influence the safety critical software; the categories are defined in sub-clause 3.2.11 of part 4 of the standard, as stated. Thus, paragraph 7.4.4.4 states that all programs that are used to make safety critical software, and which can directly or indirectly influence the software, have to have some documentation of the programs’ function and use.

The reference to sub-clause 7.1.2 is probably intended to indicate where among the overall safety development phases the activities connected with paragraph 7.4.4.4 have their place. The software phases and their corresponding sub-clauses and paragraphs are listed in table 1 of IEC 61508-3, which is referenced from sub-clause 7.1.2.

The note to paragraph 7.4.4.4 makes it clear that the documentation required for programs used to make safety critical software is not the same as that required for the safety critical software itself. It also points out that if a program is used to make something that ends up in the safety critical software, then the relevant bits of the program’s documentation should be copied over into the safety critical documentation.

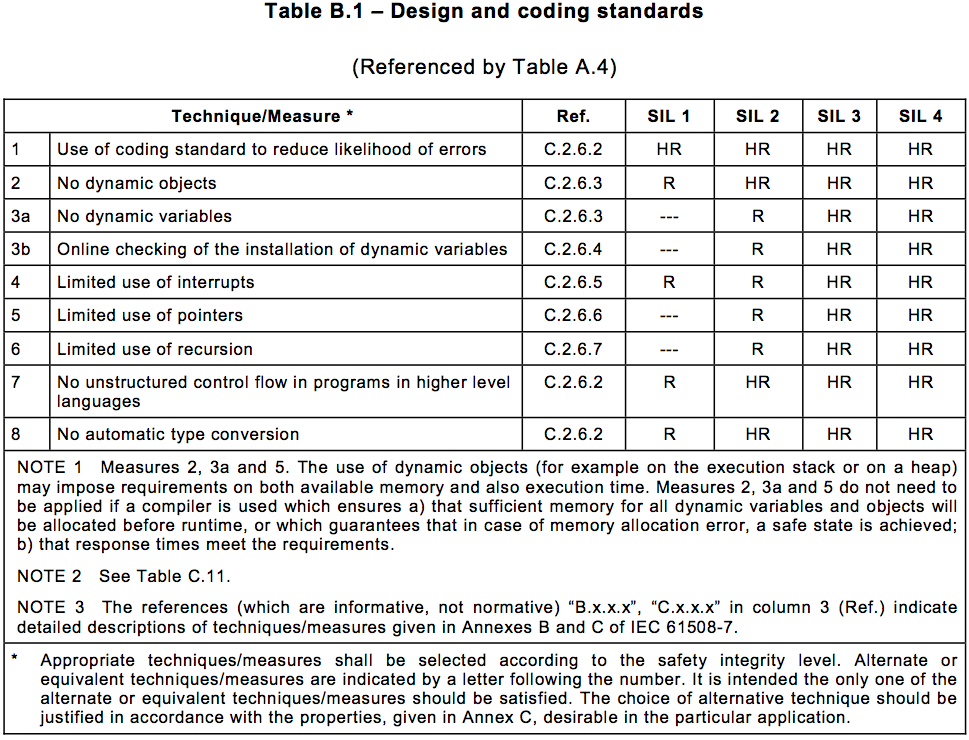

Figure 5.4 – Table B.1 “Design and coding standards” from IEC 61508-3. International Electrotechnical Commission 2009.

A sizeable portion of the standard is made up of tables. In part 3 alone there are 43 tables, some spanning multiple pages. An example of such a table is shown in Figure 5.4. The table shows recommendations for design and coding standards, that is, guidelines for how to write the computer code. From top to bottom, the table lists some different techniques that can be used during coding. They are mainly prohibitions on using certain forms of code that are considered unsafe, such as automatic type conversion.2 Next to each technique are listed the safety levels from SIL (Safety Integrity Level) 1 to SIL 4. SIL 1 is the lowest level of safety. For each level and each technique, it is noted whether that particular technique is recommended, “R”, or highly recommended, “HR”, for the safety level in question. For safety levels 3 and 4, all the techniques are highly recommended.

Since the table lists recommendations, the safety critical software is not absolutely required to use the suggested techniques. However, it must still fulfil the standard; so if it does not use a particular recommended technique, a good explanation is expected for why, and how the standard’s requirements are nevertheless still fulfilled. This goes especially for the highly recommended techniques. Thus, while a company is in principle free to develop its safety critical software as it likes, in practice it must take into account that the more it deviates from the standard’s recommendations, the more it must be prepared to argue, prove, and provide evidence for its own solution. As such, in terms of paperwork the easiest thing might well be simply to follow the standard’s recommendations without question.
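To give an impression of why a technique such as automatic type conversion is considered unsafe, the following is a minimal sketch in C. It is not taken from the standard or from any of the companies discussed; the function names `valve_setting_implicit` and `valve_setting_checked` and the valve scenario are hypothetical. The first function lets the compiler narrow an `int` to an 8-bit value without comment, while the second checks the range and makes the conversion explicit.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Implicit conversion: the int is silently reduced modulo 256. */
uint8_t valve_setting_implicit(int requested)
{
    uint8_t setting = requested; /* 300 is stored as 44 */
    return setting;
}

/* Explicit conversion: out-of-range values are rejected with -1,
 * and the cast is visible to a reviewer or a code checker. */
int valve_setting_checked(int requested, uint8_t *out)
{
    assert(out != NULL);
    if (requested < 0 || requested > UINT8_MAX) {
        return -1; /* the caller must handle the error */
    }
    *out = (uint8_t)requested;
    return 0;
}
```

In the first version a request of 300 becomes a setting of 44 and nothing in the program signals the error. A coding rule that forbids such conversions forces the programmer to write something like the second version, where the out-of-range case has to be decided upon explicitly.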

1 International Electrotechnical Commission 2009.

2 A programming language technique which some programmers find more convenient, but which also carries the risk of allowing serious errors to go undetected.

5.5 Hermeneutical analysis

After discussing the realities of safety critical programming, we will turn to a hermeneutical analysis of safety critical programming, in parallel with the hermeneutical analysis of game programming at Tribeflame in Section 4.2. The purpose is to show how hermeneutical theory can be applied in a concrete case. In the case of safety critical programming, the analysis is conceptually more difficult than that provided in Chapter 4 because we are not analysing a single company’s concrete work process, but rather the role that safety critical standards play in the work processes of different kinds of companies. To make matters even more complicated, we are not even analysing a concrete safety standard as such, but rather the idea of using a standard in safety critical programming. In other words, we will analyse safety critical standards as a cultural form.1 The analysis is primarily based on 24 interviews that I conducted in connection with a pan-European research project about reducing the costs of certification, mostly according to IEC 61508. The project was sponsored by the European Union, and universities and companies from different safety critical industries participated. The interviews are summarized in the list of source material. For a short introduction to hermeneutical analysis, see Section 4.2.1 (Short introduction to hermeneutics); for a more thorough explanation, see Chapter 9 (Understanding).

The first step in understanding safety standards is pre-understanding; that is, those fundamental skills such as reading and writing that are a prerequisite for beginning the process of understanding. In the field of safety standards, those fundamentals are: to understand engineering terms; to understand software engineering terms; and to have some knowledge of programming techniques, which is necessary in order to understand, for example, the table presented in Figure 5.4. In addition, it is necessary to be able to understand the kind of document that IEC 61508 represents, exemplified above by paragraphs 7.1.2.2, 7.1.2.3, and 7.4.4.4 of this standard. It is a lawyer-like language of numbered clauses, sub-clauses and paragraphs, full of references and abbreviations, which uses awkward sentence structures and many uncommon words with precise definitions. It is perhaps not a daunting task for a trained engineer to learn this, but many other people would find it challenging.

The prejudices inherent in safety standards are those assumptions that are necessary in order to make sense of them. The certification company Exida has published a book about the IEC 61508 that contains the following characterization of the standard:

“Many designers consider most of the requirements of IEC 61508 to be classical, common sense practices that come directly from prior quality standards and general software engineering practices.” 2

It is undoubtedly the case that most of the IEC 61508 is common sense in safety engineering, but what is interesting here is that the safety critical notion of “common sense” is a very specific one, limited to a narrow range of situations. For example, the table in Figure 5.4 essentially recommends that the programming language technique of dynamic memory allocation should not be used at all (entries 2 and 3a). This is a good idea in a safety critical system, but in most other forms of programming, including game programming, it would not be common sense to program without dynamic memory allocation: arguably, it would be madness.3
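What programming without dynamic memory allocation can look like is sketched below in C. This is one common pattern, a fixed pool of objects reserved at compile time, and not a description of what any of the interviewed companies do. The names `Message`, `POOL_SIZE`, `message_acquire`, and `message_release` are hypothetical and chosen only for the illustration.

```c
#include <assert.h>
#include <stddef.h>

#define POOL_SIZE 4 /* hypothetical capacity, fixed at compile time */

typedef struct {
    int sensor_id;
    int value;
} Message;

/* All storage is reserved statically; malloc and free are never called. */
static Message pool[POOL_SIZE];
static unsigned char in_use[POOL_SIZE];

/* Returns the first free slot, or NULL when the pool is exhausted. */
Message *message_acquire(void)
{
    for (size_t i = 0; i < POOL_SIZE; i++) {
        if (!in_use[i]) {
            in_use[i] = 1;
            return &pool[i];
        }
    }
    return NULL;
}

/* Marks a slot as free again. Returns 0 on success, or -1 if the
 * pointer does not refer to a slot that is currently in use. */
int message_release(const Message *m)
{
    assert(m != NULL);
    for (size_t i = 0; i < POOL_SIZE; i++) {
        if (&pool[i] == m && in_use[i]) {
            in_use[i] = 0;
            return 0;
        }
    }
    return -1;
}
```

The price of this approach is that the maximum number of messages has to be decided in advance, and the program must cope when the pool is empty. The benefit is that the memory use of the program is known before it runs, which is the kind of predictability that the table in Figure 5.4 aims for. In a game, where the amount of content varies from moment to moment, the same restriction would be a severe handicap.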

What, then, are the assumptions needed to make sense of safety critical standards? First of all that safety is an engineering problem that can be approached with engineering methods and solved satisfactorily in that way. Closely connected with this assumption is the notion that difficult problems can be broken down into simpler problems that can be solved separately, and that the solutions can be combined to form a solution to the original problem. A third, and also closely connected, assumption is that both process and problem can be kept under control, using the right approaches. All three assumptions are also found in the software engineering models described in Section 3.1.3 (Process thinking).

Personal reverence is a foundation of understanding. A person interpreting a text or a practice must have some kind of personal attachment to the thing being interpreted. In Tribeflame, we saw that the personal reverence mainly took the form of personal relations between actual people; but personal reverence can also take the form of, for example, reverence for the author of a text. The safety standards do not depend on personal relationships; they are impersonal. Nor is there any particular reverence for the authors of the standards; they are anonymous or hidden among the other members of committees. Where, then, is the personal reverence directed in safety critical programming? The programmers revere neither colleagues nor authors, but instead they seem to revere the system of safety standards itself, as an institution.

The reverence associated with safety standards is probably a case of reverence towards a professional identity and code of conduct. What programmers revere in the standards is their professionalism, rather than the standards themselves. This is a consequence of their bureaucratic character. According to Weber, modern bureaucracies replace personal loyalty with loyalty to an impersonal, professional cause.4 The prerequisite for this shift in loyalty is that professionals have a long and intensive education,5 which provides them with the necessary training and self-discipline to behave professionally. Accordingly, most of the people working with safety standards have long educations at university level or equivalent. Out of 26 people whom I interviewed, and who work with safety critical software development, 20 are engineers, five are educated in computer science, and one in physics.

All knowledge builds upon tradition of some kind. In the case of safety standards, the tradition goes way beyond that which is written in the standards themselves. In the words of an experienced engineer at Cassidian:

“In order to understand the avionic standard it’s really not sufficient to have a training course of only two or three days. I think a training course is helpful, but it covers only let’s say 10 or 20 per cent – if you are very intelligent, let’s say 20 per cent – of your knowledge, but the remaining 80 per cent, it’s really experience. It’s your everyday job.” 6

The point is that it is not enough to read and study the standard alone; it is also necessary to acquire the experience to understand it. I have already mentioned the lengthy education of people who work with safety standards, which prepares them to understand the standards to some degree. The additional experience they require is what we can call exposure to the tradition of safety standards.

A specific safety standard does not exist in isolation. There are previous versions of the standard, and the standard itself is based on an older standard, and yet other standards have influenced it. The tradition of which a standard is part can be followed back in time like a trail. The main components of the tradition are nicely summed up in the Exida quote above:

“Many designers consider most of the requirements of IEC 61508 to be classical, common sense practices that come directly from prior quality standards and general software engineering practices.” 7 (My emphasis.)

The “general software engineering practices” embody a tradition of engineering of which the safety standards are also part. The education of people working with the standards familiarizes them with this part of the tradition. The other part of tradition, the “prior quality standards”, points to a long judicial tradition for regulating commerce and manufacture. The standards all have a more or less clear legal authority for deciding the validity of practices, and this authority is, per tradition, delegated to experts in the field. The history of engineering is so long and complicated that it is probably futile to try to point out exactly when engineering tradition was institutionalized for the first time.8 With the judicial tradition, it is at least safe to say that engineering practice was regulated via patent legislation as early as 1624 in England.9

The question of authority is important because it plays a central role in the hermeneutical notion of understanding. The texts of the safety standards carry great authority in themselves; but they can only do so because they have been assigned authority by outside parties. The ultimate source of authority is often the law, where national or international (European Union) legislation has adopted some standard as a requirement of professional liability. Where standards are not backed directly by courts, but rely solely on the agreement of industry associations, they will often be backed indirectly by courts, since there is often a legal formulation that the “state of the art” in engineering will be taken as the measure of liability, and the “state of the art” will then in practice often be taken to be the existing standards of the industry association.10

The words of the standards, however, are rarely taken to court in lawsuits, and therefore the authority of the courts is in practice delegated to other institutions, the most important being the independent assessment companies such as the German TÜV. These private institutions have authority over the interpretation of the standards in practice, as they judge whether a product satisfies the standard or not. Since there are several institutions that act in competition with each other, they do not have absolute authority individually. Another important source of authority lies with the committees of experts that define the standards. Their authority, which represents a compromise position taken by the competing companies in a particular industry, is the guarantee that the standards do actually represent “general engineering practice”, or in other words, “state of the art”. A final, local form of authority is the management of the individual company, which decides what standards the company is to follow, and as such acts as a source of authority over the individual developer.

The central question in understanding how safety standards are used is that of application – what the standards are used for. The obvious answer is that the standards are used to ensure that products are safe, but this answer is too simplistic. As we have seen above, in the case of the farming systems developer Skov, it is not strictly necessary to follow standards in order to develop safety critical products. This has to do with the fact that although part of the standards’ function is to impose a certain “safe” engineering form on the product, this is not their primary function. The primary function is to act as a bureaucratic mechanism to generate documentation, by making it compulsory. The engineering manager for Wittenstein Aerospace and Simulation, a small company, describes safety critical software development thus:

“The [program] code’s a by-product of the safety critical development, the code is sort of always insignificant, compared to the documentation we generate and the design. You can spend five per cent of your time doing the coding in safety critical development, and the rest of the time on documentation, requirements, design, architecture, safety planning, evaluation, analysis, verification, and verification documents.” 11

It is of course crucial to notice that the engineering manager speaks not merely of requirements, design, and so on, but of documented requirements, documented design, documented architecture, and so on. It is clear that while documentation plays a central role in safety critical development, documentation of the development is not the same thing as safe products. To answer the question of what the application of safety standards is, we have to split the question in two: How do the standards contribute to safety? And what exactly is meant by “safety”?

The standards contribute to safety primarily by acting as a system for generating documentation, and the documentation serves as evidence for what has happened during development. This evidence links the engineers’ application of standards to the courts of law from which the standards’ authority derives, by assigning legal responsibility for every part of the development. The legal system cannot exercise its authority without being able to clearly determine legal responsibility in each case. On the other hand, the standards are linked to the engineering tradition through their insistence on repeatability. Responsibility cannot be assigned meaningfully to the engineers unless the engineers are in control of what they are responsible for, and the engineering tradition’s way of enacting control is through repeatability. In the safety standards’ assignment of responsibility, the role of the engineers is to provide the required evidence and to convince the standards’ authorities that it is sufficient. The role of the independent assessors, such as TÜV, is to provide the verdict on whether the engineers’ evidence is convincing enough.

The question of what “safety” means is the central question in understanding safety standards. In parallel with the process at Tribeflame, which could be described as a single effort to determine what “fun” means for computer game players, the whole practice of safety critical development is essentially an attempt to find out what safety means. Being pragmatic, engineers would like to define unambiguously what safety means: that is, to operationalize the concept completely. However, this is not possible. An illuminating definition from an article about integrating unsafe software into safety critical systems reads:

“Unsafe software in the context of this article means: Software that does not fulfil the normative requirements for safety critical software (especially those of IEC 61508).” 12

This definition is both pragmatic and operational, but it does not say much about the nature of safety. Defining safety as that which fulfils the standards does not help to explain why the standards are defined as they are.

We saw that repeatability is central to the standards. Repeatability is expressed in the safety standards through underlying probability models. These models are present in terms such as “failure rate” and “safe failure fraction”, which combine to form the measure for safety – SIL (Safety Integrity Level). Thus, the safety standards’ notion of safety is a probabilistic one.13 What is probabilistic, however, is never certain, and so safety becomes not a question of whether accidents can be avoided altogether, but rather a question of how much one is prepared to pay in order to try to avoid an unlikely event. In that way, safety becomes a question of cost-effectiveness. In the words of a senior principal engineer for the microcontroller manufacturer Infineon:
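The probabilistic character of these terms can be illustrated with a small sketch in C. It is a deliberate simplification and not the procedure of the standard: the safe failure fraction is computed with its usual definition, as the share of all failures that are either safe or dangerous but detected, and a dangerous failure rate per hour is then mapped to a SIL band. The band thresholds used here are the commonly cited figures for high demand or continuous mode and are an assumption that should be checked against IEC 61508 itself. In the standard, the SIL that can be claimed also depends on factors such as hardware fault tolerance, which are ignored here. The names `FailureRates`, `safe_failure_fraction` and `sil_from_pfh` are hypothetical.

```c
#include <assert.h>

/* Failure rates per hour for one component (hypothetical structure). */
typedef struct {
    double safe;                 /* failures that lead to a safe state      */
    double dangerous_detected;   /* dangerous, but caught by diagnostics    */
    double dangerous_undetected; /* dangerous and not caught by diagnostics */
} FailureRates;

/* Safe failure fraction: share of failures that are safe or detected. */
double safe_failure_fraction(FailureRates r)
{
    double total = r.safe + r.dangerous_detected + r.dangerous_undetected;
    assert(total > 0.0);
    return (r.safe + r.dangerous_detected) / total;
}

/* Map an average frequency of dangerous failure per hour to a SIL band.
   Thresholds are assumed from commonly cited high demand mode figures.
   Returns 0 when the rate is too high for any SIL. */
int sil_from_pfh(double pfh)
{
    assert(pfh >= 0.0);
    if (pfh < 1e-8) return 4;
    if (pfh < 1e-7) return 3;
    if (pfh < 1e-6) return 2;
    if (pfh < 1e-5) return 1;
    return 0;
}
```

For example, a component with rates of 6e-7 safe, 3e-7 dangerous detected and 1e-7 dangerous undetected failures per hour has a safe failure fraction of 0.9. Nothing in such a calculation says that an accident cannot happen; it only says how rarely one is expected, which is why the question of safety turns into a question of how much improvement is worth paying for.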

“The whole key in automotive is being the most cost effective solution to meet the standards. Not to exceed it but only to meet it. So you can put all the great things you can think of inside … [but] if it means more silicon area in the manufacturing, and it’s not required to meet the standard, then you’re not cost competitive anymore.” 14

From a hermeneutical perspective, understanding always happens as a result of a fusion of horizons of understanding. So in order to find areas where a lot is happening in terms of understanding, one has to identify the places where horizons of understanding change a lot. In the world of safety critical standards, horizons change relatively little.15 Once a horizon of understanding of the standards is established, it serves as a common language or a shared set of basic assumptions between the involved parties, making it easier for them to understand, and communicate with, each other. A quote from the software director of the elevator company Kone illustrates how the development models of the standards serve as a common language between developer and certifier:

“Waterfall [i.e. the V-model] is definitely easier [to follow] for the notified body, for the people who are giving the certificate. Waterfall is easier for them because there is a very easy process, because you have steps, you have the outputs of each phase, and then you have the inputs for the next phase, so it’s very easy to analyse.” 16

This shared horizon of understanding is a practical reality, but it is also an ideal; the ideal essentially being that a well-written standard should not need to be interpreted. However, this ideal is contradicted by reality, in which the shared understanding is not, after all, universal. This can be seen from the fact that companies in safety critical industries not uncommonly have to educate their customers: to teach them the proper understanding of the standards, and to teach them “to see the intent behind the standard and not just the words on the page.” 17

This education of customers works to bring about a shared understanding if the people who need to be educated are prepared to accept the assumptions and prejudices of the safety standards. However, if their values are in conflict with the assumptions of the standards, a mismatch will occur. The principal engineer from Infineon sees a conflict between creative engineers and the standards:

“Engineers like to create, they like to be inventive, they like to experiment … Consequently engineers don’t like the standard. They don’t want to adopt it, it makes their lives more painful, it makes them do more jobs they don’t want to do. So they don’t see any value in it because they think they’re perfect engineers already.” 18

The conflict in this case is primarily with the implicit assumption of the standards that documentation is the way to engineer a safe system. When, as the Wittenstein engineering manager says, 95 per cent of the safety critical engineering job consists of documentation, this can be a real point of contention with other traditions that do not value documentation as highly. One such tradition is that of the Agile development philosophies, illustrated by the company Skov, which does “not produce documentation for the sake of documentation”.19

|

Hermeneutical concept |

Results |

|

Prejudice |

Engineering problem solving. The assumptions that problems can be reduced to simple sub-problems, and that development can be controlled. |

|

Authority |

Ultimately the courts of national and international law. More immediately industry associations and official certifying bodies. |

|

Received tradition |

A trail of standards. Institutions that preserve engineering knowledge and legal oversight of industrial production. |

|

Personal reverence |

Largely replaced by reverence towards institutions and a professional identity. A consequence of the bureaucratic character of standards. |

|

Pre-understanding |

Knowledge of engineering and software engineering terms, and programming techniques. Familiarity with the legalistic language of safety standards. |

|

Understanding |

Safety critical programming rests on both engineering and judicial tradition, and it is necessary to understand both. |

|

Hermeneutical circle |

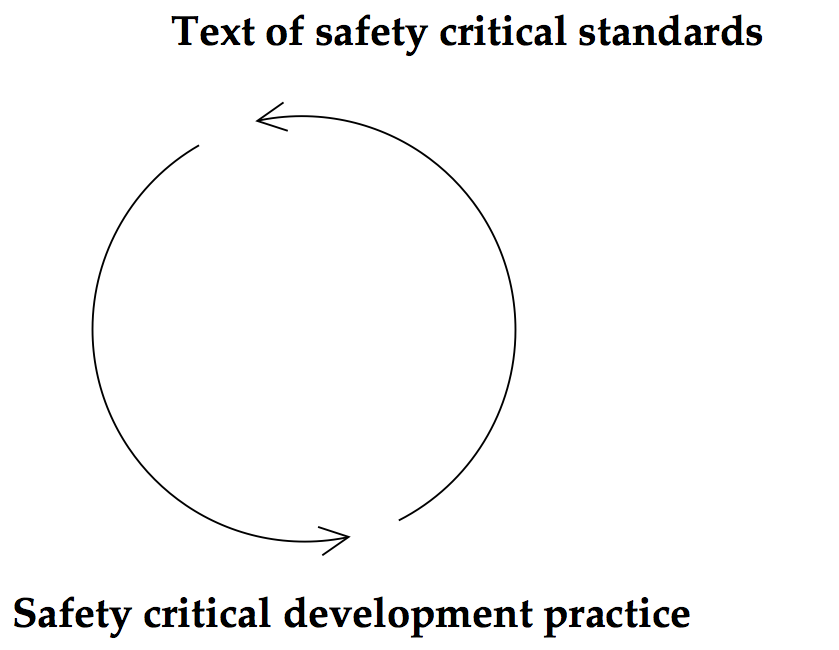

The regulatory texts of the standards and the experiences of engineering practice presuppose one another. |

|

Effective history |

Largely absent. |

|

Question |

What “safety” means. The safety concept is founded on probability, which as a consequence leads to a search for cost-effective solutions. |

|

Horizon of understanding |

Horizons change relatively little compared to e.g. game programming. The safety standards and process models function as a common language between practitioners. |

|

Application |

To exert control over development processes through the production of documentation: a bureaucratic mechanism. Control is a prerequisite for placing responsibility, which is demanded by courts of law. |

Figure 5.6 – A schematic summary of the principal results of analysing safety critical programming with hermeneutical theory.

In order truly to understand the safety standards, it is necessary to understand why they are written the way they are, and this requires understanding both the engineering side and the judicial side of the way they function. In the process by which the standards are created, and in the work practices of safety critical development, we see a remarkable degree of influence between the text of the standards, which resemble law texts, and the practical engineering experience that comes from working with the standards. The influence constitutes a hermeneutical circle between the regulatory text of the standards and the engineering practice being regulated (see Figure 5.5). To understand one, it is necessary to understand the other. Indeed, it is practically impossible to understand the standard just by reading it, without prior knowledge of the engineering practice to which it refers. Likewise, the engineering practice of safety critical development is directed by the standards to such a degree that it would be hard to make sense of the developers’ work practices without knowledge of the standards.

To consider the effective history of safety standards means acknowledging that they have grown out of certain traditions and that the problems they solve are the problems of those traditions: first and foremost problems of engineering, and the assignment of legal responsibility. It is to acknowledge that there are limitations to the problems that can be solved with safety standards, and that the notion of safety implied by the standards is a specific one – safety in this sense means to fulfil the requirements of the standards in a cost-effective way, and to avoid certain classes of engineering malfunctions with a certain probability. As was the case with game development at Tribeflame, there is very little explicit reflection upon effective history within the safety critical industries. Safety critical developers are of course aware that their practices and actions have important consequences in society, but they tend to regard their own actions wholly from within the thought world of safety critical development.

To summarize this hermeneutical analysis of safety critical programming, some of the results are presented in schematic form in Figure 5.6.

1 See Section 6.1 (Cultural theory: practice and form).

2 Medoff & Faller 2010 p. 2.

3 Some common programming languages, most notably C, lack automatic memory management, but it is only the most hardware-centric programming languages that entirely lack the possibility of allocating memory dynamically. An example is the original definition of Occam, the Transputer programming language.

4 Weber 1922 [2003] p. 66.

5 Ibid. p. 65.

6 Interview 24.

7 Medoff & Faller 2010 p. 2.

8 Though it was probably in the military, since most early engineering applications were military – in contrast to the later civil engineering.

9 Cardwell 1994 p. 106. An example of a more recent institution that is closer in function to the standards (though distinct from them) is the Danish special court of commerce and maritime law, Sø- og handelsretten, which was established in 1862.

10 Interview 32.

11 Interview 25.

12 “ ‘Nicht-sichere Software’ – das bedeutet im Kontext dieses Artikels: Software, die nicht die normativen Anforderungen (insbesondere der IEC 61508) an sicherheitsgerichtete Software erfüllt.” Lange 2012 p. 1.

13 Interestingly, it is difficult to apply a probabilistic failure model to software, because in principle software either works or does not. As explained in a presentation by the engineer William Davy, there is no such thing as “mean time to failure” in software, nor a notion of structural failure due to stress on materials, for example (see list of source material).

14 Interview 31.

15 This is because practitioners have very similar backgrounds. Contrast with the practice of Tribeflame in which horizons change relatively more as the developers seek to expand their horizon to merge with those of their future customers, a mass of largely unknown players.

16 Interview 29.

17 Interview 31.

18 Interview 31. The conflict between creativity and adherence to process can be resolved by regarding, not the product, but the process, as a target for creativity. This seems to be appropriate for safety critical companies that are highly process oriented, such as Wittenstein: “We like to think we don’t have a quality system. We have a business manual quality, and the way we run our business is running the same thing – so, we operate a business. And these processes are how we operate our business.” Interview 25. The same point is made about the development of the Space Shuttle software in a journalistic essay by Charles Fishman from 1997: “People have to channel their creativity into changing the process … not changing the software.”